Recent advances in AI promise to transform how software engineering teams build and maintain code. AI-powered IDEs such as Cursor now provide real-time coding assistance and debugging support. While asking ChatGPT in your browser for help writing and fixing code is handy, the real power lies in how teams can systematically use these AI platforms to maintain consistent coding standards and principles across their codebase.

ScatterSpoke has been an Atlassian Marketplace partner since 2021. Our mission is to deliver actionable insights by integrating disparate engineering data. We’ve begun incorporating AI into our development workflow, allowing us to automatically enforce consistent coding standards and principles across our codebase. In this article, we’ll share the principles and prompts we’ve used to enforce code quality in our apps with AI.

Maintaining Coding Consistency with AI

Traditionally, software teams have relied on linting to enforce basic coding style guidelines and surface common issues. However, linting is limited to flagging superficial style violations – it does not ensure alignment on foundational design patterns, coding approaches or architecture.

Establishing shared development principles like DRY (Don’t Repeat Yourself) or setting guidelines on method signatures can have a much more significant impact on code quality and system design. For example, enforcing DRY principles eliminates duplicate code, makes the codebase more maintainable, and reduces the possibility of subtle bugs when copying code. Having method signature standards avoids confusion over inputs and outputs, and facilitates cleaner interfaces between components.
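As a concrete illustration, here is one hypothetical method signature standard a team might agree on: a function with more than two inputs takes a single typed options object, and every function declares its return type explicitly. The names `CreateTaskOptions`, `TaskSummary`, and `createTask` are invented for this sketch and are not taken from ScatterSpoke's codebase.

```typescript
// Hypothetical standard: functions with more than two inputs take one typed
// options object, and every function declares its return type explicitly.
interface CreateTaskOptions {
  title: string;
  assignee: string;
  dueDate?: Date; // optional inputs are marked with `?`
  priority?: 'low' | 'medium' | 'high';
}

interface TaskSummary {
  id: string;
  title: string;
  priority: 'low' | 'medium' | 'high';
}

// Callers pass named fields instead of positional arguments, so the order
// of inputs can't be mixed up at the call site.
function createTask(options: CreateTaskOptions): TaskSummary {
  const { title, assignee, priority = 'medium' } = options;
  return {
    id: `${assignee}-${title.toLowerCase().replace(/\s+/g, '-')}`,
    title,
    priority,
  };
}

const task = createTask({ title: 'Fix login bug', assignee: 'sam' });
console.log(task.id); // prints sam-fix-login-bug
```

Because the inputs and output are both named types, a reviewer (or an AI assistant following the standard) can check conformance by reading the signature alone.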

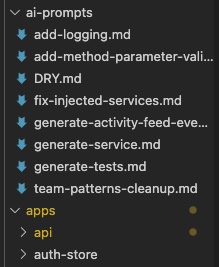

At ScatterSpoke, we’ve instituted an “ai-prompts” directory in our source control. When developers write new code or update existing code, we run a Markdown file from this directory that contains the coding principles and standards our team agreed on during one of our retrospectives. With a few keystrokes, developers can bring their code in line with our established guidelines.
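One way to combine a prompt file from such a directory with the file being edited is sketched below. It assumes the prompt text has already been read from disk; the `buildCleanupPrompt` helper and the BEGIN/END marker layout are hypothetical choices for illustration, not the format of any particular tool.

```typescript
// Hypothetical helper: pairs a team-agreed prompt with the file it should act on.
interface PromptRequest {
  instructions: string; // contents of a Markdown file from ai-prompts/
  filePath: string; // path of the file being cleaned up
  source: string; // current contents of that file
}

function buildCleanupPrompt({ instructions, filePath, source }: PromptRequest): string {
  // The team agreement comes first so it reads as the governing rules; the
  // target file follows between explicit markers so its boundaries are clear.
  return [
    instructions.trim(),
    '',
    `Apply the instructions above to ${filePath}:`,
    `--- BEGIN ${filePath} ---`,
    source.trimEnd(),
    `--- END ${filePath} ---`,
  ].join('\n');
}

const prompt = buildCleanupPrompt({
  instructions: '# Cleanup Instructions\n\nFollow these instructions to clean up this file.\n',
  filePath: 'src/sum.ts',
  source: 'function sum(a: number, b: number): number {\n  return a + b;\n}\n',
});
console.log(prompt);
```

In practice the instructions would be read from the repository (for example with Node's `fs.readFileSync`) and the resulting text handed to whichever assistant the team uses.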

For example, the following prompt was our v0 team agreement:

# Cleanup Instructions

Follow these instructions to clean up this file.

## Your Role

You are an expert TypeScript developer who has been hired to clean up this code.

## 1. **Documentation**

### Every Method Should Have a JSDoc

Having well-documented code is essential. This makes the codebase easier to maintain and understand.

```typescript
/**
 * Calculates the sum of two numbers.
 * @param {number} a The first number.
 * @param {number} b The second number.
 * @returns {number} The sum of a and b.
 */
function sum(a: number, b: number): number {
  return a + b;
}
```

Also, check that each JSDoc comment matches its method; if they don't match, fix the comment.

## 2. **Optimization**

### Use `Promise.all` for Concurrent Requests

Where possible, use `Promise.all` to make independent requests concurrently instead of awaiting them one after another.

```typescript
const promise1 = fetch('/api/data1');
const promise2 = fetch('/api/data2');

Promise.all([promise1, promise2]).then(([response1, response2]) => {
  // Handle responses here
});
```

### No Unused Imports

If you find any imports that are not being used, remove them from the file.

### Fix ESLint Violations

If you find any violations of the project's ESLint configuration, fix them.

## 3. **Typing**

### Avoid Using `any`

The whole point of TypeScript is to have strong, static typing. Avoid using `any` as it defeats this purpose. Always prefer to give a specific type or create an interface/type.

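```typescript
interface User {
  id: number;
  name: string;
}

// Instead of `function getUser(): any`, declare the concrete return type.
function getUser(): User {
  // Fetch and return the user (placeholder value for illustration)
  return { id: 1, name: 'Jane Doe' };
}
```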

## 4. **Add Logging**

Always import our logger:

```typescript
import { SpokeLogger } from '@spoke/common/util/SpokeLogger';
```

Then instantiate a private logger named after the service class, like this:

```typescript
private logger = new SpokeLogger(<SERVICE CLASS NAME>.name);
```

Next, add logging to methods where it is important, and choose the appropriate SpokeLogger log level for each circumstance. We support the following log levels:

- `log(message: any, context?: string)`
- `warn(message: any, context?: string)`
- `debug(message: any, context?: string)`
- `verbose(message: any, context?: string)`

## 5. **DRY Principle**

### Do Not Repeat Yourself

Check for repetitive patterns and code, and refactor them into functions, modules, or utilities.

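```typescript
const a = 1, b = 2, c = 3, d = 4; // example inputs

// Instead of repeating the same logic inline:
// const sum1 = a + b;
// const sum2 = c + d;

// Do: extract it into a reusable function.
function add(x: number, y: number): number {
  return x + y;
}

const sum1 = add(a, b);
const sum2 = add(c, d);
```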

## 6. **SOLID Principles**

### Adhere to SOLID Principles

The SOLID principles are a set of design principles in object-oriented programming that, when followed properly, can lead to more understandable, flexible, and maintainable code.

1. **Single Responsibility Principle (SRP)**

A class or function should have only one reason to change. This promotes separation of concerns.

2. **Open/Closed Principle (OCP)**

Software entities should be open for extension but closed for modification. This encourages developers to extend existing code rather than modify it.

3. **Liskov Substitution Principle (LSP)**

Derived classes must be substitutable for their base classes. In TypeScript, this means that when extending a class, the subclass should preserve the behavior callers expect from the base class rather than changing it.

4. **Interface Segregation Principle (ISP)**

No client should be forced to depend on interfaces they do not use. This means having specific interfaces for different use cases rather than a one-size-fits-all approach.

5. **Dependency Inversion Principle (DIP)**

High-level modules should not depend on low-level modules but should depend on abstractions. This can be achieved in TypeScript using interfaces.
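The last two principles in the prompt are the most abstract, so here is a minimal sketch of what interface segregation and dependency inversion can look like in TypeScript. `TaskReader`, `TaskReportService`, and `InMemoryTaskReader` are hypothetical names invented for illustration; they are not part of the prompt.

```typescript
// Interface segregation: the service only needs to read tasks, so it depends
// on a narrow read-only interface rather than a full create/update/delete API.
interface TaskReader {
  findOpenTasks(assignee: string): Promise<string[]>;
}

// Dependency inversion: the high-level service depends on the abstraction...
class TaskReportService {
  private readonly reader: TaskReader;

  constructor(reader: TaskReader) {
    this.reader = reader;
  }

  async summarize(assignee: string): Promise<string> {
    const tasks = await this.reader.findOpenTasks(assignee);
    return `${assignee} has ${tasks.length} open task(s)`;
  }
}

// ...and a low-level detail (here, an in-memory store) implements it. A
// database- or API-backed reader could be swapped in without editing the service.
class InMemoryTaskReader implements TaskReader {
  private readonly tasks: Record<string, string[]>;

  constructor(tasks: Record<string, string[]>) {
    this.tasks = tasks;
  }

  async findOpenTasks(assignee: string): Promise<string[]> {
    return this.tasks[assignee] ?? [];
  }
}

const service = new TaskReportService(
  new InMemoryTaskReader({ sam: ['Fix login bug', 'Update docs'] }),
);
service.summarize('sam').then(console.log); // prints sam has 2 open task(s)
```

Since `TaskReportService` never names a concrete class, tests can pass in a stub reader, which is also an example of the Open/Closed Principle at work.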

When we run this prompt against code with repetitive logic or missing JSDoc comments, the AI assistant scans the code in focus, identifies issues based on the team agreement, and corrects them.
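A hypothetical before-and-after shows the kind of change the prompt asks for. The "before" version uses `any`, awaits two independent requests one after the other, and has no JSDoc; the cleaned-up version adds types, uses `Promise.all`, and documents the function. The `fetchUser` and `fetchTeam` stubs stand in for real API calls and are invented for this example; actual assistant output will vary.

```typescript
interface User {
  id: number;
  name: string;
}

interface Team {
  id: number;
  name: string;
}

// Hypothetical stubs standing in for real API calls.
async function fetchUser(id: number): Promise<User> {
  return { id, name: 'Sam' };
}

async function fetchTeam(id: number): Promise<Team> {
  return { id, name: 'Platform' };
}

// Before: untyped, undocumented, and the second request waits for the first.
// async function loadDashboard(userId: any, teamId: any): Promise<any> {
//   const user = await fetchUser(userId);
//   const team = await fetchTeam(teamId);
//   return { user, team };
// }

/**
 * Loads the data needed to render a user's dashboard.
 * @param {number} userId The ID of the user to load.
 * @param {number} teamId The ID of the user's team.
 * @returns {Promise<{ user: User; team: Team }>} The user and team, fetched concurrently.
 */
async function loadDashboard(
  userId: number,
  teamId: number,
): Promise<{ user: User; team: Team }> {
  // The two requests are independent, so run them concurrently.
  const [user, team] = await Promise.all([fetchUser(userId), fetchTeam(teamId)]);
  return { user, team };
}

loadDashboard(1, 7).then(({ user, team }) => {
  console.log(`${user.name} on ${team.name}`); // prints Sam on Platform
});
```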

By committing these prompts to source control, we can easily enforce our guidelines across all of our code. The AI helps us scale a consistent coding philosophy across the engineering team, beyond what linting or cyclomatic complexity rules can cover.

Agreeing on Team Standards

The prompts are simple Markdown files we compose as a team after debates about what we care about most in our codebase.

AI prompts work best for enforcing objective standards rather than subjective principles that are open to interpretation. Standards related to code structure, formatting, comments, and logging work well. However, prompts around more conceptual ideas like “good design” are challenging to implement. The more specific you can be, the better.

We also have prompts for enforcing other standards like:

- Extracting complex if/else conditionals into well-named functions

- Adding JSDoc comments for functions lacking documentation

- Converting nested callbacks to async/await for promises

- Enforcing our design patterns, like the repository pattern

- Generating unit tests
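Two of these standards are easy to show side by side. In the sketch below, `readConfig` is a hypothetical callback-style API and `canMergePullRequest` is an invented example of a well-named predicate replacing an inline conditional; neither comes from ScatterSpoke's prompts.

```typescript
// Hypothetical callback-style API, used only for illustration.
type Callback<T> = (err: Error | null, value?: T) => void;

const configStore: Record<string, string> = {
  env: 'staging',
  'staging.dbHost': 'db-staging-01',
};

function readConfig(key: string, cb: Callback<string>): void {
  // Complete asynchronously, as a real I/O-backed API would.
  Promise.resolve().then(() => {
    const value: string | undefined = configStore[key];
    if (value === undefined) {
      cb(new Error(`Missing config: ${key}`));
    } else {
      cb(null, value);
    }
  });
}

// Wrap the callback API once in a Promise so callers can use async/await.
function readConfigAsync(key: string): Promise<string> {
  return new Promise((resolve, reject) => {
    readConfig(key, (err, value) => {
      if (err) {
        reject(err);
      } else if (value === undefined) {
        reject(new Error(`No value returned for ${key}`));
      } else {
        resolve(value);
      }
    });
  });
}

// Before:
// readConfig('env', (err, env) => {
//   readConfig(`${env}.dbHost`, (err2, host) => { /* use host */ });
// });
// After: the second lookup depends on the first, so it stays sequential.
async function loadDatabaseHost(): Promise<string> {
  const env = await readConfigAsync('env');
  return readConfigAsync(`${env}.dbHost`);
}

interface PullRequest {
  approvals: number;
  checksPassed: boolean;
  isDraft: boolean;
}

// Before: if (pr.approvals >= 2 && pr.checksPassed && !pr.isDraft) { ... }
// After: the condition gets a name that states its intent.
function canMergePullRequest(pr: PullRequest): boolean {
  return pr.approvals >= 2 && pr.checksPassed && !pr.isDraft;
}

loadDatabaseHost().then((host) => console.log(host)); // prints db-staging-01
console.log(canMergePullRequest({ approvals: 2, checksPassed: true, isDraft: false })); // prints true
```

Note that the async/await version keeps the lookups sequential only because the second depends on the first; independent calls would still go through `Promise.all`.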

Manual code reviews are still critical, but the AI prompts let us focus those reviews on architecture and design rather than nitpicking minor stylistic differences. By using AI to automate foundational coding patterns, our developers can spend more time on complex problem-solving.

You can even automate these prompts via continuous integration by feeding your prompts folder to an AI CLI utility that checks the diffed files.
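A minimal sketch of the file-selection step such a CI job might use: it takes the output of `git diff --name-only` (one path per line) and keeps the TypeScript sources worth sending to the assistant. The `selectFilesForReview` name and its filtering rules are assumptions for illustration; the AI CLI invocation itself is not shown, since the choice of tool is up to your team.

```typescript
// Hypothetical selection step for a CI job that runs the ai-prompts
// instructions against changed files only.
function selectFilesForReview(diffNameOnlyOutput: string): string[] {
  return diffNameOnlyOutput
    .split('\n')
    .map((line) => line.trim())
    .filter((path) => path.length > 0)
    // Keep TypeScript sources, but skip declaration files.
    .filter((path) => /\.tsx?$/.test(path) && !path.endsWith('.d.ts'))
    // Skip vendored code (an example exclusion; adjust to your repository).
    .filter((path) => !path.includes('node_modules/'));
}

const changed = 'src/user.service.ts\nREADME.md\nsrc/types/global.d.ts\nsrc/App.tsx\n';
console.log(selectFilesForReview(changed)); // [ 'src/user.service.ts', 'src/App.tsx' ]
```

Limiting the run to the diff keeps each CI check fast and keeps the assistant's suggestions scoped to code the author is already changing.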

Conclusion

At ScatterSpoke, as an Atlassian Marketplace partner that has built multiple apps integrated with Jira, Confluence, and Bitbucket, we have seen firsthand the challenge of bridging a codebase to third-party APIs whose data doesn't always have the same shape as its own. Using AI prompts to enforce coding standards across the team has allowed us to move faster, think bigger, and integrate third-party services much more quickly than was previously possible.