Back in July I published "Encountering some turbulence on Bitbucket’s journey to a new platform," sharing publicly for the first time that Bitbucket Cloud is in the final stages of a migration from our data center onto Atlassian’s cloud platform, the same internal platform underlying Jira Cloud, Confluence Cloud, Statuspage, and many other internal services.

I also shared that because of increased file system latency as a result of this platform move, certain operations have become slower. Specifically, rendering diffs and merging pull requests both saw a measurable decline in performance after moving out of our data center.

I’m following up on that post to share many of the improvements we’ve implemented in both areas over the past month. TL;DR:

- In mid-July we shipped an optimization that improved diff response times by 40% and reduced the rate of timeouts by an order of magnitude.

- Over the past month we have deployed changes that have collectively improved end-to-end merge times by over 30 seconds during peak traffic.

Diff response times

In the July article I wrote this:

To optimize our diff and diffstat endpoints, our engineers implemented a solution where we would proactively generate diffs during pull request creation and cache them. Then upon viewing the pull request, they would see diff, diffstat, and conflict information all retrieved from the cache, bypassing the file system and avoiding increased latency.

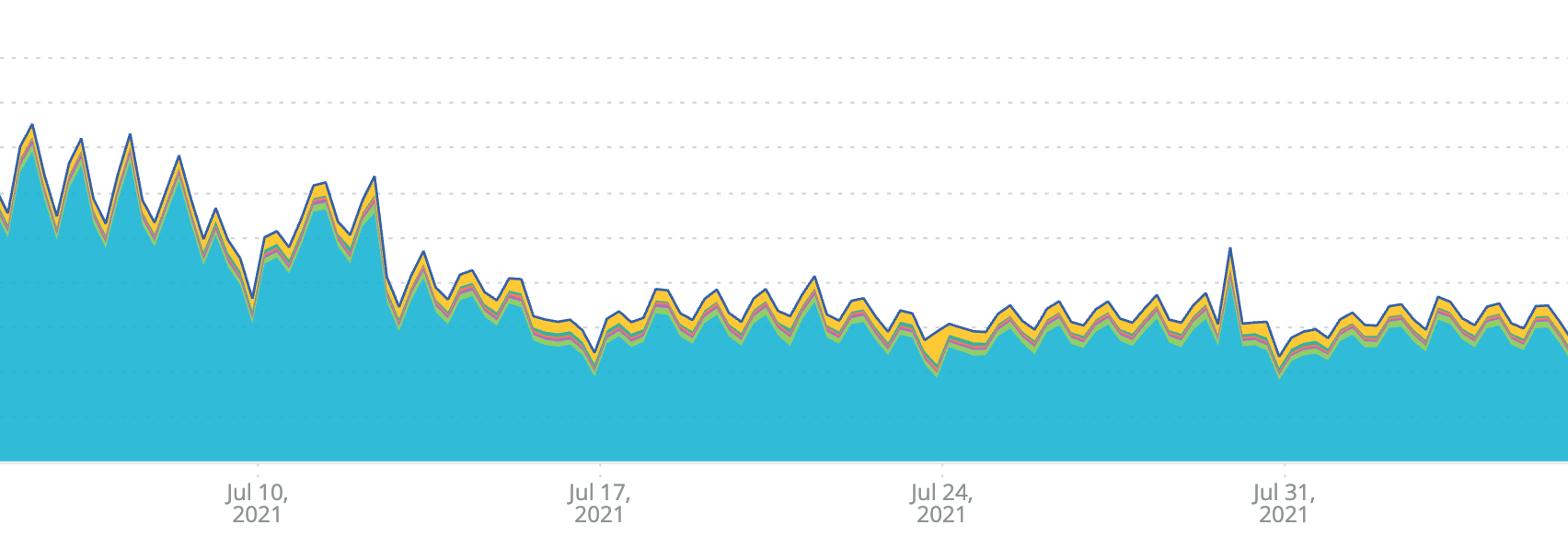

For a web service at Bitbucket’s scale, solving problems like this often doesn’t go the way you expect. While our engineers worked on resolving these issues, they discovered an optimization opportunity that would allow us to perform much more of the work required to generate the diff in local memory, significantly reducing file system I/O. We rolled out this change on July 13 and saw average response times for our diff and diffstat APIs plummet by nearly 40%:

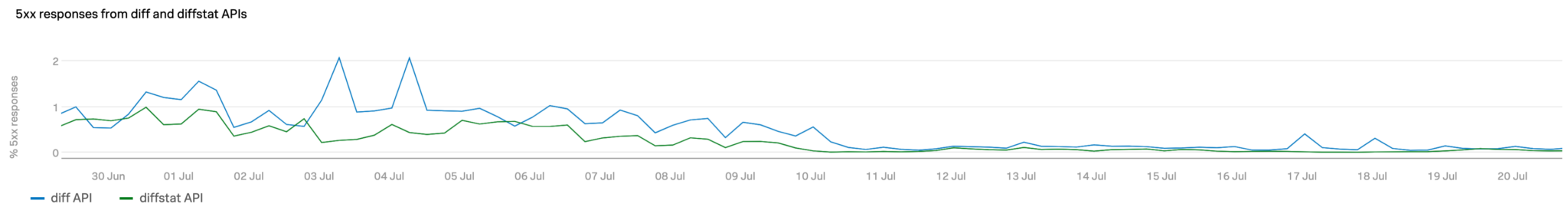

The graphs above depict average response times for these APIs. The improvement in the 90th and 99th percentiles was even more dramatic: the P99 (in other words, the slowest 1% of diff responses) got over 150% faster. The impact is clear from the rate of 5xx responses (predominantly timeouts) from these APIs, which dropped by an order of magnitude.
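To illustrate why the tail percentiles can tell a different story than the average, here is a minimal sketch using Python's standard library. The sample data and the `summarize` helper are hypothetical and are not taken from our metrics pipeline.

```python
import statistics

def summarize(latencies_ms):
    """Return the mean, P90 and P99 of a list of response times (milliseconds)."""
    # quantiles(n=100) returns the 99 cut points P1..P99.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(latencies_ms),
        "p90": cuts[89],
        "p99": cuts[98],
    }

# Hypothetical sample: 98 fast responses and 2 very slow ones.
sample = [100.0] * 98 + [5000.0] * 2
stats = summarize(sample)
```

In this made-up sample the mean and the P90 both stay low, while the P99 lands on the slow responses. That is why a change aimed at pathological cases can move the P99 much more than the average.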

So what happened to the caching solution I described previously? That’s the best part: we haven’t enabled it yet! After shipping the above optimization, our diff times are now comparable to what they were before we left our data center. We will finish our caching work, which should yield further improvements to response times; but after realizing the above performance gain we redirected our short-term efforts to addressing the next major issue affecting customers: pull request merge times.

Pull request merge execution times

Early on, we introduced code to surgically run merges with low-level profiling enabled for specific customers and store detailed profile data for our engineers to review. This allowed us to identify hot spots to focus our optimization efforts, some of which are described below.
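As a rough illustration of the idea (not our actual implementation), targeted profiling can be sketched with Python's built-in `cProfile`. The allowlist `PROFILED_WORKSPACES`, the `run_merge` wrapper, and the `store_profile` callback are all hypothetical names introduced for this example.

```python
import cProfile
import io
import pstats

# Hypothetical allowlist of workspaces opted in to profiling.
PROFILED_WORKSPACES = {"acme"}

def run_merge(workspace, merge_fn, store_profile):
    """Run merge_fn, profiling it only for allowlisted workspaces."""
    if workspace not in PROFILED_WORKSPACES:
        return merge_fn()  # everyone else pays no profiling overhead
    profiler = cProfile.Profile()
    try:
        return profiler.runcall(merge_fn)
    finally:
        # Render the ten most expensive entries by cumulative time and
        # hand the report to the storage callback for later review.
        buf = io.StringIO()
        stats = pstats.Stats(profiler, stream=buf)
        stats.sort_stats("cumulative").print_stats(10)
        store_profile(workspace, buf.getvalue())
```

Keeping the profiler behind an allowlist means its overhead only applies to the merges being investigated.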

Examining pre- and post-receive hooks

Based on profile data, one of the first areas we identified for streamlining merges was the set of hooks we run on the backend for every push, which includes pull request merges. Some of these hooks are critical as they handle enforcement of security features such as branch permissions and merge checks. However, after reviewing all the hooks we run, we identified some that could be removed from the synchronous flow of performing a merge to speed things up.

The following graphs all show worst-case scenarios for the improvements described. Bear in mind that Bitbucket processes many millions of Git operations every day, and the vast majority are far faster than this. We focused on resolving the pathological cases since we believed these to be the ones representing the most acute customer pain.

One hook, cleanup code that detects and deletes stale ref lock files, could in extreme cases take up to 50 seconds to execute. We removed this hook from synchronous processing, speeding up some merges significantly.

Another, similar hook we found was the one responsible for packing refs on a percentage of pushes. This is a proactive measure to speed up certain features that require iterating over a repository’s refs, such as listing branches or pull requests. We moved this task into a background hook which runs asynchronously after the push completes, speeding up some merges by a full minute or more.

There are other improvements we’ve made in this area—for example, we removed the hook responsible for printing links to open PRs to the terminal for merges initiated from the website, since no user would ever see these!—but the above two had the greatest impact.
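The general pattern behind these changes can be sketched as follows. This is a simplified illustration using a thread pool, not a description of our hook infrastructure; `handle_push` and the hook callables are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Background pool for work that does not need to block the push.
_background = ThreadPoolExecutor(max_workers=2)

def handle_push(push, blocking_hooks, deferred_hooks):
    """Run blocking hooks inline, then hand deferred hooks to the pool."""
    for hook in blocking_hooks:
        # e.g. branch permissions or merge checks: an exception raised
        # here propagates to the caller and rejects the push.
        hook(push)
    # Maintenance work (e.g. packing refs) is scheduled, not awaited.
    # Returning the futures lets callers or tests wait on it if needed.
    return [_background.submit(hook, push) for hook in deferred_hooks]
```

The caller gets control back as soon as the blocking hooks finish, so the time spent in deferred hooks no longer counts toward the merge.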

Synchronizing operations by locking

Another source of slowness which I have written about before is the use of aggressive locking to synchronize certain operations that modify a repository in order to protect data consistency. The truth is that some of this locking dates back to the days when Bitbucket supported both Git and Mercurial. It was necessary for our customers using Mercurial, but Git has stronger built-in mechanisms for ensuring consistent data which we can leverage.

After refactoring our merge code to remove excessive locking, we measured the performance difference between old and new implementations and observed an improvement of up to 3 seconds.
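One example of such a built-in mechanism, offered here as an assumption about the kind of thing that can replace coarse locking rather than a description of our refactoring, is that Git can make a ref update conditional on the ref's current value (the `git update-ref <ref> <newvalue> <oldvalue>` form). The toy `RefStore` below is a hypothetical in-memory stand-in that models that compare-and-swap behavior.

```python
import threading

class RefStore:
    """Toy in-memory ref store; stands in for a Git repository's refs."""

    def __init__(self):
        self._refs = {}
        # Held only for the brief compare-and-set, not for a whole merge.
        self._guard = threading.Lock()

    def read(self, name):
        return self._refs.get(name)

    def compare_and_swap(self, name, expected_old, new):
        """Point name at new only if it still points at expected_old."""
        with self._guard:
            if self._refs.get(name) != expected_old:
                return False  # another writer moved the ref; caller retries
            self._refs[name] = new
            return True
```

With this style of update, a writer that loses a race is told so and can retry, instead of every writer waiting on a long-held lock.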

Delayed processing of background tasks

While our engineers worked on identifying and implementing improvements such as the ones I’ve just described, in parallel we worked to establish better visibility in the hopes of identifying sources of slowness we might have been overlooking.

What we discovered was eye-opening. From reviewing detailed logs for some merge operations we had identified as particularly slow, we noticed that our system for scheduling and executing background tasks reported major discrepancies between the timestamps when tasks were received vs. when they were completed, which could not be explained by their runtime. During periods of peak traffic, this discrepancy reached pathological levels as can be seen in the graph below, capturing a period during which wall time exceeded 30s while task execution time remained only a few seconds.

On its face, this scenario did not seem entirely mysterious. Two straightforward explanations exist to account for the difference between execution time and wall time: tasks could be sitting in a queue, or they could be prefetched by workers and effectively delayed by other long-running tasks. However, our metrics indicated that queues were not backing up, and we had already configured our workers with prefetching disabled.

Ultimately what we found, without getting into too much detail, is that a bug in the library our services use to schedule and execute background tasks was causing a large number of workers to sit idle without executing tasks. A master orchestrator process was still consuming from our queues fast enough to prevent a backlog of messages, but these tasks were being allocated to an artificially small pool of workers, preventing effective parallelization. This bug had not affected our services running in the data center; it only started to affect things after our migration to our new cloud platform due to subtle differences in configuration.
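To show how a shrunken worker pool inflates wall time without changing execution time, here is a small deterministic simulation. The task counts, durations, and pool sizes are hypothetical and chosen only for illustration; `simulate` is not part of any library.

```python
import heapq

def simulate(num_tasks, task_seconds, workers):
    """Worst wall time (arrival to completion) when all tasks arrive at t=0."""
    # Each heap entry is the time at which one worker next becomes free.
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    worst = 0.0
    for _ in range(num_tasks):
        start = heapq.heappop(free_at)   # wait for the earliest free worker
        finish = start + task_seconds    # execution time itself never changes
        heapq.heappush(free_at, finish)
        worst = max(worst, finish)       # arrival was t=0, so wall time == finish
    return worst

healthy = simulate(num_tasks=32, task_seconds=2.0, workers=16)
degraded = simulate(num_tasks=32, task_seconds=2.0, workers=2)
```

Every task still takes 2 seconds to execute in both runs, yet the worst wall time grows from 4 seconds with 16 workers to 32 seconds with only 2, because tasks wait for one of the few active workers.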

Through extensive testing, we were able to identify changes to the configuration of the service responsible for performing pull request merges to work around this bug and restore the intended behavior. This has made a huge difference, and we can now see wall times tracking much closer to task execution times, typically within 1 second.

Crossing the finish line

I always intended to share a progress update this month, whether or not we had fully resolved the performance issues with diffs and merges. This article was edited multiple times as our outstanding engineering teams continued to find more optimization opportunities and deliver them. At this point the performance of Bitbucket is, overall, as good as or better than it was in our data center.

What I’m excited about now is sharing one final update after we’ve completed our migration by moving the last batch of customer repositories into our cloud storage layer. This has already been done for roughly 85% of repositories including those on our most active storage volumes. By the end of this month, Bitbucket Cloud will truly be data center free and operating 100% on cloud infrastructure, which means vastly improved scalability, security, and much more.

In closing, I’d like to express my gratitude to all of you, our developer community, for continuing to use Bitbucket every day. Empowering teams to collaborate and build, test, and deliver high-quality software is why we do what we do. So thank you, and stay tuned for more exciting updates to come.