
Atlassian's approach to production readiness

Learn production readiness best practices that promote reliability, security, and compliance


Warren Marusiak

Senior Technical Evangelist

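Even when teams adopt modern project structures such as DevOps, many projects lack an important planning step: a process for automatically assessing whether they are ready. Without production readiness, you can't tell whether the environment is prepared to support a new system or product. And production readiness isn't something you do just before deployment. It's important to start early, right after the project's requirements and specifications are written.

What is production readiness?

Production readiness is a set of requirements that a development team must meet before it deploys a service to production. The team sets these requirements before development starts, and the service must meet them before it is deployed. Production readiness requirements make the team think about reliability, security, and compliance from day one. By tackling these requirements early, the team can keep problems that would affect customers from surfacing after the service launches.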

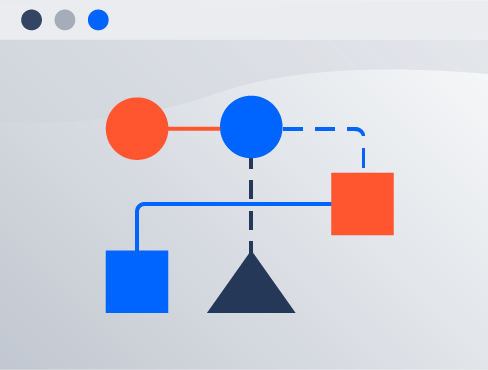

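Production readiness requires you to define three things. First, the team defines service tiers. Next, it defines service level agreements. Finally, it defines production readiness requirements. Each service tier has a service level agreement and one or more production readiness requirements. When a new service is created, it is assigned a service tier. The tier's service level agreement sets requirements for availability, reliability, data loss, and service restoration. A service must meet all of its production readiness requirements before it can go live in production.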


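Atlassian's own production readiness process is described in detail below. It can help your team build a production readiness process of its own, but every organization should adapt the steps to its own work and environment.

Define service tiers

Service tiers let you group services into easy-to-understand buckets. For each service tier, you set an SLA and production readiness requirements, based on the scenarios in which the tier's services are used. Think of service tiers as buckets of criticality: every service in a given bucket is equally critical and should be treated the same way. A bucket of critical customer-facing services will likely have stricter requirements than a bucket of tertiary services that affect only employees.

The following example service tiers are based on Atlassian's service tiers.

- Tier 0: Critical components that everything depends on

- Tier 1: Products and customer-facing services

- Tier 2: Business systems

- Tier 3: Internal tools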

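Tier 0: Critical core infrastructure

Tier 0 services provide the supporting infrastructure and shared service components that tier 1 services depend on to function. A component is considered critical if any of the following is true:

- It is required to run tier 1 services or for users to access them

- It is required for customers to sign up for tier 1 services

- It is required for staff to support or perform key operational functions of tier 1 services, such as:

  - Starting, stopping, or restarting the service

  - Deploying, upgrading, rolling back, or applying a hotfix

  - Determining the current state (up, down, or degraded)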

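Tier 1: Essential services

Tier 1 services provide critical business, customer, or product functionality. They are customer-facing services or business-critical internal services. When one degrades or becomes unavailable, the company loses money, critical business functions can't be carried out, and core functionality for customers stops working. Tier 1 services require a 24/7 support roster, high SLAs on key metrics, and strict production requirements.

Tier 2: Non-core services

Tier 2 services provide customer-facing services that aren't part of core functionality. They deliver added value or additional user experiences that are considered optional or "nice to have."

Tier 2 includes public services that mainly serve marketing purposes, such as a public company website. These services don't provide business-grade customer-facing services, or internal services that teams use to do their jobs (such as collaboration tools or work tracking).

Tier 2 services may or may not require a 24/7 support roster, and they have lower SLAs and fewer production requirements.

Tier 3: Internal-only or less critical functionality

Tier 3 services provide the company's internal functions or experimental beta services. This class can also include experimental features currently offered to early adopters, where degraded service quality during the beta period is expected. Tier 3 is the low-SLA bucket for services that are supported on a best-effort basis only.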

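Define SLAs for service tiers

A service level agreement (SLA) defines availability and reliability targets, along with targets for data loss and recovery time after a service interruption. Each service tier has its own service level agreement. The following table shows an example service level agreement for each of the four service tiers defined in this article.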

| SLA metric | Tier 0 | Tier 1 | Tier 2 | Tier 3 |
|---|---|---|---|---|
| Availability | 99.99% | 99.95% | 99.90% | 99.00% |
| Reliability | 99.99% | 99.95% | 99.90% | 99.00% |
| Data loss (RPO) | Less than 1 hour | Less than 1 hour | Less than 8 hours | Less than 24 hours |
| Service restoration (RTO) | Less than 4 hours | Less than 6 hours | Less than 24 hours | Less than 72 hours |

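Availability

| Tier 0 | Tier 1 | Tier 2 | Tier 3 |
|---|---|---|---|
| Up to 1 minute of downtime per week; up to 4 minutes per month | Up to 5 minutes of downtime per week; up to 20 minutes per month | Up to 10 minutes of downtime per week; up to 40 minutes per month | Up to 1 hour 40 minutes of downtime per week; up to 6 hours 40 minutes per month |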
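The downtime budgets above follow from the availability percentages: allowed downtime = period length × (1 - availability). The Python sketch below reproduces that arithmetic; the function and constant names are illustrative. Note that the table's monthly figures match a four-week (28-day) month; over a 30-day month the budgets come out slightly larger (about 4.3 minutes for tier 0, for example).

```python
MINUTES_PER_DAY = 24 * 60

# Availability targets (percent) per tier, from the SLA table above.
AVAILABILITY_TARGETS = {0: 99.99, 1: 99.95, 2: 99.90, 3: 99.00}


def downtime_budget_minutes(availability_pct: float, period_days: float) -> float:
    """Maximum downtime, in minutes, allowed over a period for an availability target."""
    unavailable_fraction = 1 - availability_pct / 100
    return period_days * MINUTES_PER_DAY * unavailable_fraction


if __name__ == "__main__":
    for tier, pct in AVAILABILITY_TARGETS.items():
        weekly = downtime_budget_minutes(pct, 7)
        four_weeks = downtime_budget_minutes(pct, 28)
        print(f"Tier {tier}: {weekly:.1f} min/week, {four_weeks:.1f} min per 28 days")
```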

Reliability

| Tier 0 | Tier 1 | Tier 2 | Tier 3 |
|---|---|---|---|
| At most 1 failure per 10,000 requests | At most 1 failure per 2,000 requests | At most 1 failure per 1,000 requests | At most 1 failure per 100 requests |
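Each reliability target converts to "one failure per N requests" via N = 1 / (1 - reliability). The illustrative Python helpers below, not part of any particular monitoring tool, do that conversion and check an observed request count against a tier's target. Percentages are passed as strings so that `fractions.Fraction` parses them exactly, which avoids floating-point rounding right at the boundary.

```python
from fractions import Fraction


def requests_per_allowed_failure(reliability_pct: str) -> Fraction:
    """How many requests may be served per single failure, e.g. "99.95" -> 2000."""
    failure_fraction = 1 - Fraction(reliability_pct) / 100
    return 1 / failure_fraction


def meets_reliability(reliability_pct: str, total: int, failed: int) -> bool:
    """True if the observed success rate is at or above the reliability target."""
    if total == 0:
        return True  # no traffic in the window, so nothing to violate
    observed_success = Fraction(total - failed, total)
    return observed_success >= Fraction(reliability_pct) / 100
```

For example, a tier 1 service that served 10,000 requests may fail at most 5 of them and still meet its 99.95% target.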

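Data loss (RPO)

This number is the maximum amount of data, measured as a window of time, that may be lost if the service fails. If a tier 0 service fails, it loses less than one hour's worth of data.

Service restoration (RTO)

This number is the maximum time it takes to restore the service and get it running again. A tier 0 service is fully restored within 4 hours.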
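One way to sanity-check a service against these two targets is to compare its backup interval with the RPO, and a restore time measured in a recovery drill with the RTO. The Python sketch below assumes that worst-case data loss equals the interval between backups (a failure just before the next backup loses everything written since the previous one); services that use continuous replication or point-in-time recovery need a different model. The function name and inputs are hypothetical.

```python
from datetime import timedelta

# RPO and RTO targets per tier, from the SLA table above.
RECOVERY_TARGETS = {
    0: {"rpo": timedelta(hours=1), "rto": timedelta(hours=4)},
    1: {"rpo": timedelta(hours=1), "rto": timedelta(hours=6)},
    2: {"rpo": timedelta(hours=8), "rto": timedelta(hours=24)},
    3: {"rpo": timedelta(hours=24), "rto": timedelta(hours=72)},
}


def recovery_violations(tier: int, backup_interval: timedelta, measured_restore: timedelta) -> list[str]:
    """Return human-readable violations; an empty list means both targets are met.

    Assumes worst-case data loss equals the backup interval. The SLA says
    "less than", so a value equal to the target counts as a violation.
    """
    targets = RECOVERY_TARGETS[tier]
    violations = []
    if backup_interval >= targets["rpo"]:
        violations.append(f"RPO: backups every {backup_interval} can lose {targets['rpo']} or more of data")
    if measured_restore >= targets["rto"]:
        violations.append(f"RTO: restore took {measured_restore}, target is under {targets['rto']}")
    return violations
```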

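Define production readiness checks

A production readiness check is a pass/fail test of a specific service quality. It concerns the service's availability, reliability, and recoverability rather than its functionality. The team should define the set of production readiness checks it will use to decide whether a service is ready for production. Not every check applies to every service tier; some apply only to specific tiers, and tier 0 services face stricter requirements than tier 3 services. The following sections give example production readiness checks that you can use as a starting point.

Backups

If a service is disrupted, you may need backups to restore data to a specific point in time. Back up data regularly, put a restore process in place, and test both the backup and the restore processes on a regular schedule. Backup and restore must be reliable and effective, so documentation and testing are essential here.

Definition of done

- A backup and restore process is in place

- The backup and restore process is documented and tested

- The backup and restore process is tested regularly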
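A restore test only proves something if the restored data matches the original. The Python sketch below shows the shape of such a test, assuming a local directory stands in for the service's data and a gzip-compressed tar archive stands in for the backup; a real service would call its own backup and restore tooling instead. It backs up, restores into a fresh directory, and compares SHA-256 digests of every file.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path


def file_digests(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in root.rglob("*")
        if p.is_file()
    }


def backup(source: Path, archive: Path) -> None:
    """Write a gzip-compressed tar archive of the source directory."""
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(source, arcname=".")


def restore(archive: Path, target: Path) -> None:
    """Extract the archive into the target directory."""
    target.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Use the safer "data" extraction filter where this Python version supports it.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(target, **kwargs)


def backup_restore_round_trip(source: Path) -> bool:
    """Back up source, restore it elsewhere, and check that every file matches."""
    with tempfile.TemporaryDirectory() as tmp:
        archive = Path(tmp) / "backup.tar.gz"
        restored = Path(tmp) / "restored"
        backup(source, archive)
        restore(archive, restored)
        return file_digests(source) == file_digests(restored)
```

Running a check like this on a schedule, and alerting when it returns `False`, is one way to cover "tested regularly" rather than testing once at launch.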

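Capacity management

Clearly describe the capacity this service provides, and in particular the limits it places on consumers. Run performance tests to confirm that the service operates within the expected limits.

Here are some examples of information to test and publish to consumers:

- The service is limited to X requests per second

- The service guarantees a response time of X

- Whether the service's X feature is replicated across regions

- Consumers should not do X, for example:

  - Overload the service

  - Upload files larger than X

Definition of done

- The service's limits are identified and documented

- Performance tests have been run to confirm that the limits are respected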
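One common way to enforce a published limit such as "X requests per second" is a token bucket. The article doesn't prescribe a mechanism, so treat this Python class as an illustrative sketch: `rate` and `capacity` are hypothetical parameters, and the injectable clock exists so the limiter can be exercised deterministically in a performance or unit test.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an idle service accepts a burst
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; False means the caller is over the limit."""
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A request handler would call `allow()` for each incoming request and reply with HTTP 429 (Too Many Requests) when it returns `False`, which gives consumers a clear, documented signal that they have hit the published limit.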

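Customer awareness

Supportability is an important aspect of service quality, alongside reliability and usability. Support processes must be in place before a service, or a new feature of a service, goes live. Supportability includes support-focused items such as the customer support process, the change management process, and support runbooks.

Customer support process

Developers need to understand what happens when a customer contacts the support team for help, and what their own responsibilities in the support process are: for example, joining an on-call rotation or being asked to deal with problems that arise in production.

Change management process

Not every change affects customers in the same way. Some changes, such as small bug fixes, go unnoticed by customers. Others, such as a complete API rewrite, take significant effort for customers to adopt. Change management helps you assess how much a change will affect customers.

Support runbooks

A runbook gives an overview of how the service works, along with detailed descriptions of problems that may occur and how to resolve them. Because services change over time, update runbooks regularly and confirm that the documented support procedures are still accurate.

Definition of done

- Documentation that answers most of the questions the support team needs answered to investigate an issue

- A working change management process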

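Disaster recovery

One kind of disaster is the loss of an availability zone. A service needs enough resilience to keep working correctly if an availability zone fails. Disaster recovery has two parts: creating and documenting a disaster recovery process, and continuously testing that documented process. Test disaster recovery regularly, using the documented disaster recovery plan to exercise your ability to handle an availability zone failure.

Definition of done

- The DR plan is documented

- The DR plan has been tested

- Regular testing of the DR plan is scheduled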
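Before running a DR exercise, a quick capacity check can tell you whether losing any single availability zone would still leave enough capacity to serve the load. This Python sketch assumes capacity is tracked per zone in a common unit (for example, requests per second); the zone names and numbers are hypothetical, and the check complements, rather than replaces, actually testing the documented DR plan.

```python
def zone_loss_report(capacity_by_zone: dict[str, int], required: int) -> dict[str, bool]:
    """For each availability zone, report whether the remaining zones
    could still serve the required load if that zone were lost."""
    total = sum(capacity_by_zone.values())
    return {zone: total - cap >= required for zone, cap in capacity_by_zone.items()}


def survives_any_single_zone_loss(capacity_by_zone: dict[str, int], required: int) -> bool:
    """True only if the service keeps enough capacity no matter which zone fails."""
    return all(zone_loss_report(capacity_by_zone, required).values())


if __name__ == "__main__":
    zones = {"zone-a": 400, "zone-b": 400, "zone-c": 200}  # hypothetical capacities
    print(zone_loss_report(zones, required=700))
    # {'zone-a': False, 'zone-b': False, 'zone-c': True}
```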

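Logging

Logs are useful for many reasons: detecting anomalies, investigating during or after an outage, and tracking malicious activity by linking related events across services through a unique identifier. There are many kinds of logs. Here are some useful ones that many services should include:

- Access logs

- Error logs

Definition of done

- Appropriate logs are generated

- Logs are stored where they are easy to find and search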
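Linking events across services depends on every log line carrying the same unique identifier. The Python sketch below uses the standard `logging` and `contextvars` modules to stamp a correlation ID onto each access-log entry and write it as one JSON object per line. The field names, the logger names, and the idea that a caller passes its ID in (for example, via a request header) are assumptions; an error log can reuse the same formatter and filter.

```python
import contextvars
import io
import json
import logging
import uuid

# Correlation ID of the request currently being handled.
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so a log store can index and search it."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "log": record.name,
            "request_id": getattr(record, "request_id", "-"),
            "message": record.getMessage(),
        })


def make_logger(name: str, stream) -> logging.Logger:
    """Create a logger that writes JSON lines tagged with the request ID to stream."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    handler.addFilter(RequestIdFilter())
    logger = logging.getLogger(name)
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


def handle_request(access_log: logging.Logger, path: str, incoming_id: str = "") -> str:
    """Log an access entry, reusing a propagated ID or minting a new one."""
    request_id = incoming_id or str(uuid.uuid4())
    request_id_var.set(request_id)
    access_log.info("GET %s", path)
    return request_id


if __name__ == "__main__":
    buffer = io.StringIO()
    access = make_logger("access", buffer)
    handle_request(access, "/health", incoming_id="abc-123")
    print(buffer.getvalue(), end="")
```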

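Logical access checks

Logical access checks focus on how internal user access, external user access, service-to-service access, and data encryption are managed. How do logical access controls prevent unauthorized access to functionality and data? How are user permissions defined, verified, updated, and revoked? Do these controls adequately protect sensitive data?

Internal users

Key questions to answer: How are internal users authenticated? How is access granted or provisioned? How is it removed? How does privilege escalation work? What does the process for regular access reviews and audits look like?

External users

How is customer authentication handled? How is access granted or provisioned? How is it removed? How does privilege escalation work? What does the process for regular access reviews and audits look like?

Service-to-service

This is similar to the internal and external user cases. You need to decide how services authenticate with each other, for example by using OAuth 2.0.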
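For service-to-service calls, OAuth 2.0 typically means the client credentials grant (RFC 6749, section 4.4): the calling service exchanges its own client ID and secret for an access token at the authorization server's token endpoint. The sketch below only builds that token request with Python's standard library; the token URL, client ID, secret, and scope are hypothetical, and in practice the secret would come from a secrets manager rather than code.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(token_url: str, client_id: str, client_secret: str, scope: str = "") -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request.

    Follows RFC 6749 section 4.4, authenticating the client with HTTP Basic
    as described in section 2.3.1.
    """
    form = {"grant_type": "client_credentials"}
    if scope:
        form["scope"] = scope
    body = urllib.parse.urlencode(form).encode("ascii")
    # Section 2.3.1: form-encode the ID and secret, join them with ":", then Base64.
    credentials = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    basic = base64.b64encode(credentials.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )
```

Sending the request with `urllib.request.urlopen(request)` returns a JSON body whose `access_token` the caller then presents as `Authorization: Bearer <token>` on its requests to the target service (RFC 6750).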

Encryption

Teams will want to encrypt data both at rest and in transit. Describe how data encryption is managed in the service. How does the team manage keys? What is the key rotation policy?
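A key rotation policy is easiest to enforce when it can be checked automatically. This Python sketch flags keys whose age exceeds a maximum; the `KeyRecord` inventory and the 365-day limit in the usage example are hypothetical, since the article leaves the policy itself to each team.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class KeyRecord:
    """Hypothetical inventory entry for an encryption key."""
    key_id: str
    created_at: datetime  # timezone-aware creation time


def keys_due_for_rotation(keys: list[KeyRecord], max_age: timedelta, now: datetime) -> list[str]:
    """Return the IDs of keys older than the rotation policy allows."""
    return [k.key_id for k in keys if now - k.created_at > max_age]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    inventory = [
        KeyRecord("orders-db-key-2023", now - timedelta(days=400)),
        KeyRecord("orders-db-key-2024", now - timedelta(days=30)),
    ]
    print(keys_due_for_rotation(inventory, timedelta(days=365), now))
```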

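Definition of done

- Logical access checks are documented, implemented, and tested for internal users, external users, and service-to-service access

- Data at rest is encrypted

- Data in transit is encrypted

- Encryption is implemented and tested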

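Release

When you deploy a new version of a service, customer traffic must not be disrupted beyond what the service's SLA allows. Every change must be peer-reviewed, tested, and deployed through a CI/CD pipeline. After every deployment, confirm that the deployment succeeded and that functionality isn't impaired; automated post-deployment verification is preferable. Keep multiple environments, such as test, staging, pre-production, and production, so that you can test deployments.

Definition of done

- The service supports zero-downtime deployments

- There are environments in which the service can be deployed and tested before it moves to production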
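Automated post-deployment verification can be as simple as a smoke check with retries that fails the pipeline step when the new version never becomes healthy. This Python sketch assumes the service exposes an HTTP health endpoint that returns 200; the probe function, retry counts, and delay are illustrative choices, not part of any specific CI/CD product.

```python
import time
import urllib.request
from typing import Callable


def http_probe(url: str, timeout_s: float = 5.0) -> bool:
    """Smoke check: the endpoint answers with HTTP 200.

    urlopen raises HTTPError for 4xx/5xx, which verify_deployment treats as a failure.
    """
    with urllib.request.urlopen(url, timeout=timeout_s) as response:
        return response.status == 200


def verify_deployment(
    probe: Callable[[], bool],
    attempts: int = 5,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Run a post-deployment check, retrying to allow for warm-up.

    Returns True as soon as the probe passes, or False if every attempt fails,
    in which case the pipeline should stop promotion or roll back.
    """
    for attempt in range(1, attempts + 1):
        try:
            if probe():
                return True
        except Exception:
            pass  # connection errors, timeouts, HTTP errors: count as a failed attempt
        if attempt < attempts:
            sleep(delay_s)
    return False
```

In a pipeline step, something like `verify_deployment(lambda: http_probe("https://staging.example.com/healthz"))` (a hypothetical URL) would run after each deploy, with a non-zero exit code when it returns `False` so the pipeline halts before the change reaches the next environment.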

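Security checklist

A security checklist is a set of practices and standards for developing and maintaining secure infrastructure and software. These standards reduce the likelihood of privacy breaches and data breaches, which in turn increases customer trust. Create a security checklist that reflects the nature of the service you're building. Here are some example requirements:

Definition of done

- Evidence that the service has no unresolved critical or high-severity vulnerabilities

- Data is encrypted at rest in every data store

- Evidence that the service does not allow insecure HTTP connections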
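The last requirement above can be enforced in application code as well as at the load balancer. This Python sketch is a WSGI middleware (WSGI is an assumption about the service's stack) that redirects plain-HTTP requests to HTTPS and adds an HSTS header to HTTPS responses. Behind a TLS-terminating proxy, `wsgi.url_scheme` may always be `http`, so you would check the proxy's forwarded-protocol header instead; the one-year `max-age` is an illustrative value.

```python
from urllib.parse import quote


def https_only(app):
    """WSGI middleware: redirect plain-HTTP requests to HTTPS and add an
    HSTS header to responses served over HTTPS."""

    def wrapper(environ, start_response):
        if environ.get("wsgi.url_scheme") != "https":
            # Drop any port: assumes HTTPS on the default port and no IPv6 literal hosts.
            host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "")
            host = host.split(":", 1)[0]
            path = quote(environ.get("PATH_INFO", "/"), safe="/;=,", encoding="latin1")
            query = environ.get("QUERY_STRING", "")
            location = f"https://{host}{path}" + (f"?{query}" if query else "")
            start_response("301 Moved Permanently", [("Location", location)])
            return [b""]

        def start_with_hsts(status, headers, exc_info=None):
            # Tell browsers to use HTTPS for this host for the next year.
            headers = list(headers) + [("Strict-Transport-Security", "max-age=31536000")]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_hsts)

    return wrapper
```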

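Service metrics

Service metrics provide important health and diagnostic information about a service, so that you can monitor it and respond to incidents. First, define a set of metrics to monitor for each service. Next, build a dashboard containing those metrics in a tool such as Datadog or New Relic. When a metric goes out of range, raise an alarm and create a ticket for the issue.

Definition of done

Here are some examples of things to measure:

- Latency: how long it takes to process a request

- Traffic: the load that external users place on the service

- Errors: the number of errors or failures that affected users

- Saturation: how busy the service is and how much more it can handle

- Resource usage: CPU, memory, disk

- Application internals, such as queues, timings, and flows

- Service usage and core functionality: number of active users, actions per minute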
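Dashboards and alerting in tools like Datadog or New Relic do this work for you, but the underlying logic is simple. The Python sketch below computes three of the signals listed above (latency as a 99th percentile, traffic, and error rate) for one time window and reports which exceed their limits. The metric names, the nearest-rank percentile method, and the limits are illustrative assumptions, not values from the article or from either tool.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestSample:
    """One served request in the measurement window."""
    latency_ms: float
    failed: bool


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate_window(samples: list[RequestSample], window_s: float, limits: dict[str, float]):
    """Compute latency, traffic, and error signals for one window.

    Returns (metrics, alarms); alarms lists every metric that is above its limit.
    """
    count = len(samples)
    metrics = {
        "p99_latency_ms": percentile([s.latency_ms for s in samples], 99) if count else 0.0,
        "requests_per_min": count * 60 / window_s,
        "error_rate": sum(s.failed for s in samples) / count if count else 0.0,
    }
    alarms = [name for name, limit in limits.items() if metrics[name] > limit]
    return metrics, alarms
```

Each name in `alarms` would then page the on-call responder or open a ticket, matching the "raise an alarm and create a ticket" step described above.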

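Service resilience

Service resilience requirements determine whether a service can cope with changing load and with failures of its various components. A resilient service can scale automatically and can tolerate a single node failure.

Autoscaling

If the service can autoscale, make sure autoscaling is configured correctly and tested. Decide which metrics trigger autoscaling and test that the behavior works. For example, if the service stores data on disk, monitor the percentage of free disk space and autoscale by adding storage when that percentage drops below a threshold.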
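The disk example above boils down to a threshold rule. This Python sketch computes how much storage to add when the free-space percentage drops below a threshold; the function name, the fixed step size, and the numbers in the usage note are hypothetical, and in practice the rule would usually be configured in your platform's autoscaling or storage settings rather than hand-written.

```python
def storage_to_add_gb(total_gb: float, used_gb: float, min_free_pct: float, step_gb: float) -> float:
    """Return how much storage to add, in step_gb increments, to bring free
    space back to at least min_free_pct percent (0.0 if none is needed)."""
    if total_gb <= 0:
        raise ValueError("total_gb must be positive")
    if not 0 <= min_free_pct < 100:
        raise ValueError("min_free_pct must be in [0, 100)")
    if step_gb <= 0:
        raise ValueError("step_gb must be positive")
    added = 0.0
    # Each step adds capacity without adding data, so free space rises toward 100%.
    while (total_gb + added - used_gb) / (total_gb + added) * 100 < min_free_pct:
        added += step_gb
    return added
```

For example, a 100 GB volume with 85 GB used is at 15% free; with a 20% threshold and 10 GB steps, the function returns 10.0 (110 GB total, about 23% free).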

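Single node failure test

A service should be able to tolerate the failure of a single node: if one service node goes down, the service keeps working, possibly with reduced capacity. Test this by stopping at least one node in the service and observing how the system behaves, and monitor the environment while you simulate the failure.

Definition of done

- Evidence that autoscaling is implemented and tested

- Evidence that the production and/or staging environment runs on multiple nodes

- Evidence that the service is resilient to a single node failure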

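Support

Support is the process of supporting a service after it's released. Before go-live, runbooks, operational tooling, and an on-call rotation must be in place and working, so that a process for fixing problems is available when the service runs into trouble.

Runbooks

Runbooks give on-call responders the background information and instructions they need to respond to incidents and remediate them quickly.

Operational tooling

Running a service to an adequate standard means having an on-call roster, and having an operational tool such as Opsgenie configured to alert the on-call responder when the service has a problem.

On-call

Tier 2 and tier 3 services: an on-call roster is required

Tier 1 and tier 0 services: a 24/7 on-call roster is required

Definition of done

- Runbooks are written, and support staff can find them quickly

- Operational tooling is configured and tested

- An on-call rotation is in place, and the on-call responder is notified when a problem occurs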

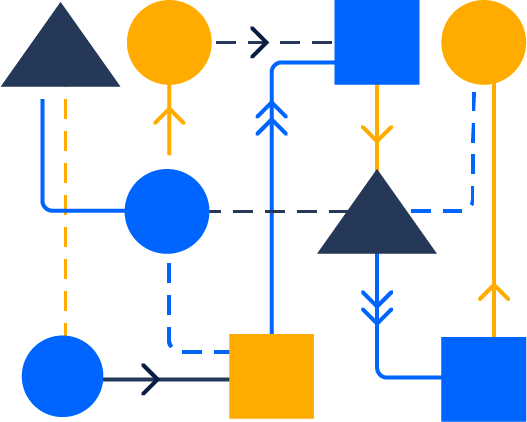

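Define production readiness checks for service tiers

Once you've defined a set of production readiness requirements, decide which of them suit each service tier. Some requirements suit every tier, while others suit only tier 0 services. Start with the lowest service tier and add requirements as you move up. Tier 3 services might have only a few basic production readiness requirements, while tier 0 services need all of them.

Recommended production readiness checks for tier 3

- Backups

- Logging

- Release

- Security checklist

- Service metrics

- Support

Start by giving tier 3 services the most basic set of production readiness requirements.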

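Recommended production readiness checks for tier 2 and tier 1

- Backups

- Disaster recovery

- Logging

- Release

- Security checklist

- Service metrics

- Service resilience

- Support

Tier 2 and tier 1 services add the disaster recovery and service resilience requirements. This example gives both tiers the same checks, but requirements don't have to be identical from tier to tier: depending on your team's needs, tier 2 and tier 1 may end up with different requirements. If a particular service tier needs an additional production readiness requirement, add it.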

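Recommended production readiness checks for tier 0

- Backups

- Capacity management

- Customer awareness

- Disaster recovery

- Logging

- Logical access checks

- Release

- Security checklist

- Service metrics

- Service resilience

- Support

Tier 0 services add capacity management, customer awareness, and logical access checks.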
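Because each tier's list extends the one below it, the recommendations can be captured as nested sets. This Python sketch mirrors the three lists above; the data layout is an illustrative choice, and a team that gives tier 2 and tier 1 different requirements would simply give them separate sets. Frozen sets keep a shared set from being modified by accident.

```python
# Checks recommended above, grouped so each higher tier builds on the one below.
BASE_CHECKS = frozenset({
    "Backups", "Logging", "Release", "Security checklist", "Service metrics", "Support",
})
TIER_1_AND_2_CHECKS = BASE_CHECKS | {"Disaster recovery", "Service resilience"}
TIER_0_CHECKS = TIER_1_AND_2_CHECKS | {
    "Capacity management", "Customer awareness", "Logical access checks",
}

REQUIRED_CHECKS = {
    3: BASE_CHECKS,
    2: TIER_1_AND_2_CHECKS,
    1: TIER_1_AND_2_CHECKS,
    0: TIER_0_CHECKS,
}


def required_checks(tier: int) -> frozenset[str]:
    """Return the production readiness checks a service in this tier must pass."""
    return REQUIRED_CHECKS[tier]
```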

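How to use production readiness

After you define service tiers, service level agreements, and production readiness requirements, assign each new service to a service tier and develop the service to meet that tier's production readiness requirements. This process ensures that every service in a given tier meets the same standard before it goes into production.

Production readiness requirements aren't fixed; they are updated as the team's needs change. You can use work items to update existing services to meet new requirements. Depending on business needs, you may also choose not to update existing services for new requirements.

Production readiness indicators

It's useful to set up automation that verifies production readiness requirements. With automated verification, you can easily build a checklist of production readiness requirements for each service, and each requirement is checked off automatically once it's met. If any production readiness requirement isn't met, the production readiness indicator is red; once all requirements are met, it turns green.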
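The article doesn't describe how the automated verification works internally. The generic Python sketch below captures the rule just described: the indicator is green only when every required check has passed, and a check with no result yet counts as unmet. It is not Compass's API; the check names and the source of the results are assumptions.

```python
from enum import Enum


class Indicator(Enum):
    RED = "red"
    GREEN = "green"


def readiness(required: set[str], results: dict[str, bool]) -> tuple[Indicator, list[str]]:
    """Return the indicator colour and the sorted list of unmet checks.

    A check with no automated result yet counts as unmet, so the indicator
    turns green only when every required check has passed.
    """
    unmet = sorted(check for check in required if not results.get(check, False))
    return (Indicator.GREEN if not unmet else Indicator.RED), unmet
```

Returning the unmet checks alongside the colour lets the landing page show not just that a service is red, but which requirements still need work.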

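Display the production readiness indicator on a service's main landing page and in other convenient places. Compass scorecards are one good place for it. Adding a production readiness indicator to a service's Compass scorecard makes this information easy to find and provides a framework for enforcing best practices and identifying areas that need improvement.

Conclusion

Developing a production readiness process takes time. First, define service tiers and service level agreements. Next, define production readiness requirements and decide which of them apply to each service tier. Once this basic framework is in place, new services can meet their production readiness requirements as part of the standard development process. And when the production readiness indicator turns green, you can be confident that the service is ready to move to production.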
